Paper of the Week: “Adversarial Examples Are Not Bugs, They Are Features”

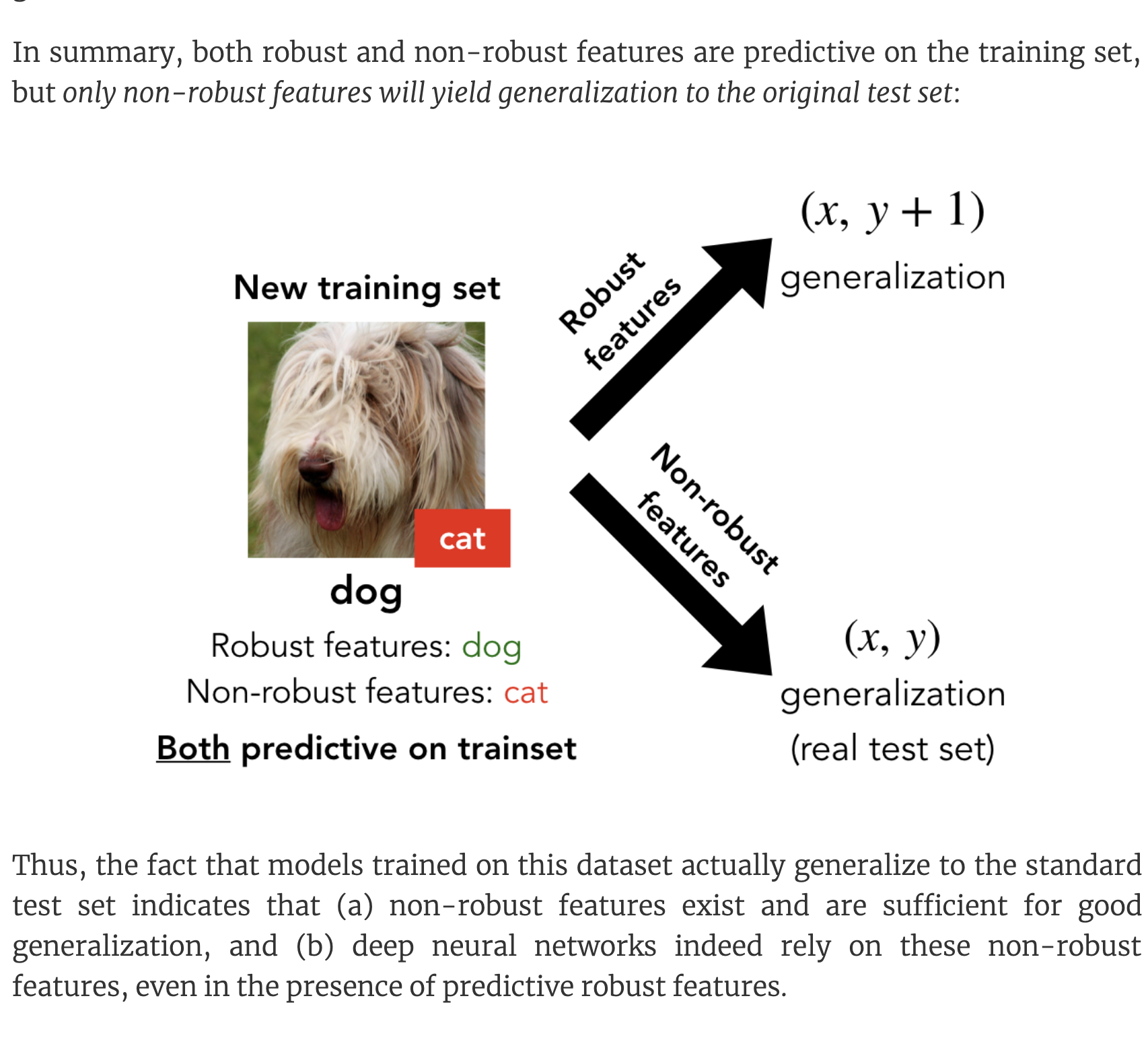

This paper proposes that “adversarial examples are due to ‘non-robust features’ which are highly predictive but imperceptible to humans”. That is, adversarial images aren’t a sign that your original network has bugs: these examples exploit features that really are predictive, they just aren’t ones that are visible to us humans! I thought the original paper was interesting and enjoyed the very meta title, but I REALLY liked the follow-up discussion and replication on distill.pub.
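If the idea of a “highly predictive but imperceptible” feature feels abstract, a toy sketch may help. This is not the paper's experiment, just a minimal illustration under made-up assumptions: each input has many features that are each only weakly correlated with the label, a linear classifier averages them, and an attacker nudges every feature by a small amount. The names (`make_example`, `predict`, `perturb`, `accuracy`) and the values of `d`, `eta`, and `eps` are all hypothetical choices for the demo.

```python
import random


def make_example(y, d, eta, rng):
    # d weak features: unit-variance Gaussian noise shifted slightly
    # (by eta) toward the label y in {-1, +1}. Assumed toy data model.
    return [rng.gauss(eta * y, 1.0) for _ in range(d)]


def predict(x):
    # Linear classifier with uniform weights: the sign of the feature sum.
    return 1 if sum(x) >= 0 else -1


def perturb(x, y, eps):
    # Worst-case L-infinity perturbation against this classifier:
    # move every coordinate by eps in the direction opposing the label.
    return [xi - eps * y for xi in x]


def accuracy(d=1000, eta=0.2, eps=0.0, n=200, seed=0):
    # Fraction of n random examples classified correctly after an
    # eps-sized perturbation (eps=0.0 means clean, unperturbed inputs).
    rng = random.Random(seed)
    correct = 0
    for _ in range(n):
        y = rng.choice([-1, 1])
        x = perturb(make_example(y, d, eta, rng), y, eps)
        correct += predict(x) == y
    return correct / n


if __name__ == "__main__":
    print("one weak feature alone:", accuracy(d=1, n=2000))
    print("1000 weak features, clean:", accuracy(d=1000, eps=0.0))
    print("1000 weak features, eps=0.4:", accuracy(d=1000, eps=0.4))
```

In this setup any single feature is barely better than a coin flip, yet the average of a thousand of them is almost always right. A shift of 0.4 per feature is small next to each feature's noise (standard deviation 1), but it reverses the sign of the average and the classifier fails on nearly every input. The classifier wasn't buggy; it was relying on real signal that happens to be fragile.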

Read the paper here

If you’re not sure you’re getting it, try reading some summaries here. And catch the follow-up discussion here.